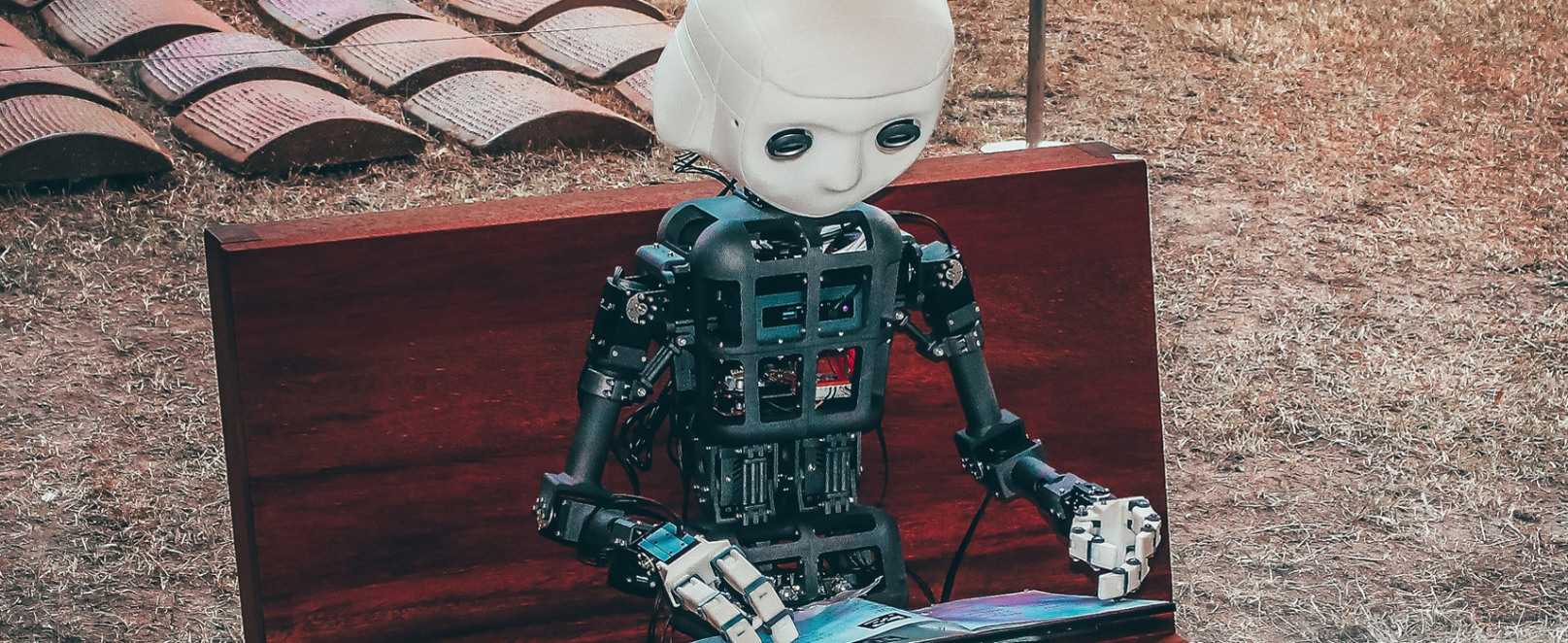

“Confirm Humanity”, the input mask asks before one is allowed to subscribe to a mailing list. “I’m not a robot” is what you are supposed to click.

TEXT Matthias C. Kettemann, Humboldt Institute for Internet and Society

The irony grows when you consider that here a human is confirming to a machine (strictly speaking: an algorithm-based communications application) that they are not a machine. Why, one might ask, are only humans allowed to subscribe to e-mail lists? And what does this have to do with the fundamental questions of our society?

After the initial euphoria about the potential of artificial intelligence, the realization has dawned that machines can get things wrong. Study after study proves it: Algorithms discriminate. Yet they were also developed for this very purpose: to differentiate. What matters, however, are the criteria by which to differentiate, and by which human and non-human decision-making mechanisms (are allowed to) influence each other. What is more, neither the selection of criteria nor the attitudes and ideologies behind that selection are objective. And the data sets used to drive automated learning are all the more unobjective simply because they are large. Indeed, since the data is almost always historical, it is burdened with the ideas and beliefs of the past.

Guidelines for Automated Decisions

The call for guidelines for algorithms, their development, and their use is therefore justifiably growing louder. So are the calls for the right to be subject only to human decisions; for the right not to communicate with social robots (social bots) without realizing it; for the prohibition of automated decision-making systems in many areas of society, especially within the constitutional state; and for insight into the logic of automated decisions (already enshrined in current law).

In short, it seems that while on the one hand the digitalization of all areas of life continues, with media convergence and the Internet of Things making our cell phones, newspapers, and refrigerators targets of hacker attacks, the societal forces of inertia are gaining strength. These forces are partly fed by actual threats, but they are also ideologically charged as a fundamental criticism of progress and technology.

We search with Google, but Google also searches us; we use social networks, but social networks also exploit us. Drawing red lines for the technology of human-machine interaction in an ethically defensible and legally tenable way is difficult in times of opposing approaches to prevention policy and technological progress. For this reason, we need to take a radical look at the fundamental question: How can digitalization be designed in an ethically optimal way?

What Rules Does Artificial Intelligence Need?

First, ethics: Ethics helps us to act properly. Ethical rules and laws shape the way we live together, help us avoid conflicts, protect rights, and contribute to social cohesion. Of course, rules are subject to a constant process of change. Particularly in the field of high technology, ethics and laws must follow suit as technical applications advance. The questions we have to ask ourselves are complex: What criteria are needed for the programming of chatbots so that they communicate fairly, i.e., without unjustified discrimination? What rules must apply when programming artificial intelligence so that it serves the good of all? How do we design the algorithms that shape our society?

Researchers at the Alexander von Humboldt Institute for Internet and Society are investigating these questions. Supported by the Stiftung Mercator, they are showing how digitalization can be made fairer. They have support from the highest level: The project is being carried out under the patronage of President Frank-Walter Steinmeier, and the project’s fellows have already been guests of the German head of state twice. Among other things, they showed him that digitalization must always focus on people – something the president approved of enormously. However, the researchers at HIIG also show how research can be improved. They have shown that innovative science formats, so-called research sprints and clinics, can quickly produce vital knowledge that can then help policymakers develop better rules.

Using innovative scientific formats, the fellows were able to provide the ethics of digitalization with an important update. In research sprints and research clinics, they worked out ways to legally screen algorithms for discriminatory content. They were able to identify what researchers need to know to effectively evaluate the algorithmic moderation of content on platforms (more data, especially raw data, is important; relying only on what platforms say is not enough). These findings are important and align well with the current European legislative agenda. More insight into the logic of using algorithms (and auditing them) is an important element of both the Digital Services Act and the Digital Markets Act.

The Right to Justification

In other projects, research sprints, and research clinics, HIIG fellows at Harvard University’s Berkman Klein Center cooperated with the Helsinki City Council to make the use of artificial intelligence in the public sector legally compliant. Incidentally, a great deal is happening in the area of platform research: Research infrastructures are currently being established at several institutes to keep a close eye on the platforms. At HIIG, the Platform Governance Archive is making the historical terms of use of platforms searchable. At the Leibniz Institute for Media Research | Hans Bredow Institute, the Private Ordering Observatory is investigating private rule-making.

Whenever an actor, be it the state or a company, has rights and obligations and assigns goods or burdens, this decision must be explainable and justifiable. All those affected by these decisions have a right to know how they were made. And that is especially true when algorithms are involved.

The Frankfurt-based philosopher Rainer Forst has called this the right to justification. This right applies offline as well as online, even if the justification can be more concise depending on the situation and the decision-making processes can be faster. The project has also shown that careful governance of the way in which these justifications are communicated is always required.

What applies to algorithms was also demonstrated during the coronavirus pandemic: Only when rules and decisions are understood are they perceived as legitimate. Algorithms are being used in more and more areas of society. A central task of an ethics of digitalization is therefore to pay attention to the protection of human autonomy and dignity in such a way that our right to understand what is happening (to us) and why remains protected.

Programme tips

3. NOV 19.00, hybrid, German

Airbnb, Uber, Lieferando: Die Zukunft der Wirtschaft? (Airbnb, Uber, Lieferando: The Future of the Economy?)

ORGANISER

Einstein Center Digital Future, Humboldt Institut für Internet und Gesellschaft, Weizenbaum-Institut

4. NOV 15.00, digital, German

Menschliche und Künstliche Intelligenz spielerisch verstehen (Understanding Human and Artificial Intelligence Through Play)

ORGANISER

University of Surrey

6. NOV 18.00, digital, English

Unsolved Problems in the Study of Intelligence

ORGANISER

Cluster of Excellence — Science of Intelligence

7. NOV 11.00, hybrid, English

ETH Global Lecture

ORGANISER

ETH Zürich

Prof. Dr. Matthias C. Kettemann works at HIIG in the management team of the project on the ethics of digitalization and teaches innovation and Internet law at the University of Innsbruck.

Published in Der Tagesspiegel on 15 October 2021